dbt (Data Build Tool) ¶

A little bit about me ¶

- Data Analyst @ Autodesk

- You can find me at RaulingAverage.dev

- Enjoy Coffee, Learning, and Running..near the beach

Notes:

I am not a core-contributor to the dbt project, but a mere user

I hope you and your families are safe and healhty during these times! Moreover, I encourage y’all to Social Distance, Wear Masks, and support social-movements.

What will we be talking about today? ¶

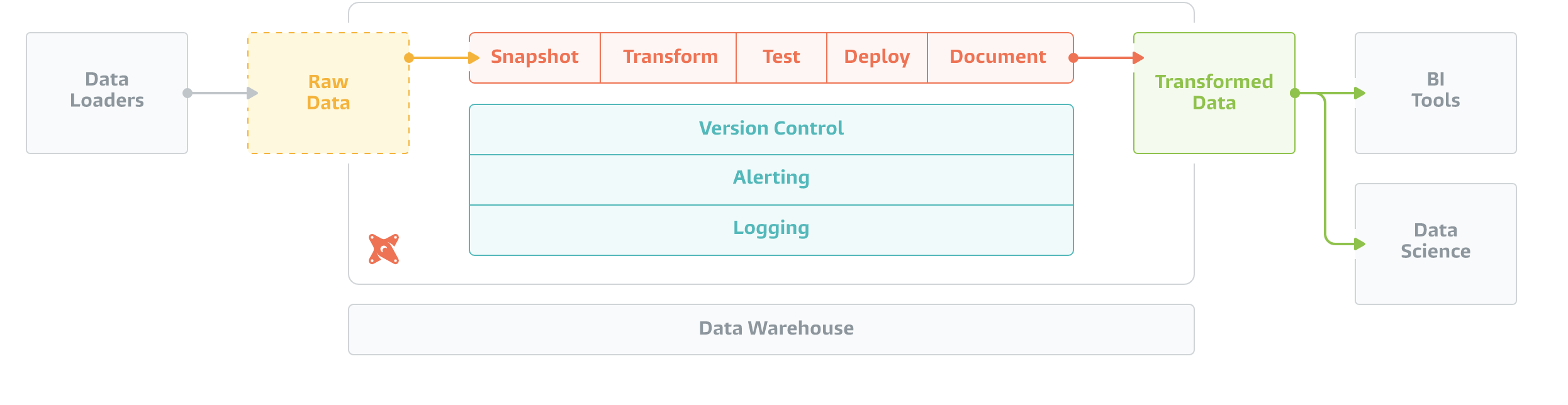

dbt, Data Build Tool, is an open-source package developed by Fishtown Analytics, that applies the principles of software engineering to analytics queries, an approach that dramatically increases your leverage as a Data Professional

Mainly, dbt helps in creating positive analytics engineering in the Transformation in ELT (Extract, Load, Transform)

Source

Source

Benefits of using dbt are:

- Version Control Data Models

- Table Snapshots & Table Materialization

- Documentation

- Templating & User Defined Functions (UDF)

Jinja Statements <3

- Tests

SQL Assertions or other SQL-related testing

SQL Assertions or other SQL-related testing - Logging

- Alerting

And more!

Consideration on using dbt as a part of your Analytics Engineering ¶

We have this fake data ingested from a fake API Pizza-is-Cool-blog.com.

[{

"id": 1,

"first_name": "Reamonn",

"last_name": "Dreier",

"email": "rdreier0@tinyurl.fake",

"gender": "Male",

"ip_address": "34.14.555.55"

}, {

"id": 2,

"first_name": "Ulric",

"last_name": "Skarr",

"email": "uskarr1@csmonitor.yahoo",

"gender": "Male",

"ip_address": "Unknown"

}, {

"id": 3,

"first_name": "Ransom",

"last_name": "Doe",

"email": "rspeirs2@usgs.nah",

"gender": "Male",

"ip_address": 'N/A'

}]

We may want to do some cleaning/transformations within our extraction. (Of the older ETL philosophy)

#PseudoCode.

# Note: Also pretend we extracted the data in another script or unseen function call.

# import json

# pizzaJson = json.loads('Resources/Pizza/Is/Cool/Fam/api_pizza.json)

### Etc cleaning, removing corner cases....

### Blah....

# Then use another script/function to push data to database

However, what happens if we want to retroactively see what occurred in the (pure) raw data? We may not have that historical data, as we did some preprocessing....

However, let's focus on ingesting that raw data, and pushing it to the Transformation step (Newer ELT philosophy) using dbt

-- Raw data in base_pizza_users_logins.sql file

WITH source AS (

SELECT * FROM {{ source('API_PIZZA', 'Logins')}}

)

SELECT * FROM source

Next, we clean up the data for pre-reporting data model creation

-- Next layer of data model called staging_pizza_user_logins.sql

WITH raw_source AS (

SELECT

id AS user_id,

first_name,

last_name,

email,

gender,

CASE

WHEN ip_address = 'Unknown' OR ip_address = 'N/A'

THEN NULL

ELSE ip_address

END AS ip_address

FROM {{ ref('base_pizza_users_logins')}}

)

SELECT * FROM raw_source

Run the dbt cloud or dbt CLI

terminal

dbt run --model +staging_pizza_user_logins Again, there are other powerful dbt features ¶

But I'll end this as a cliffhanger...until next time ¶

- Check out the Fishtown Analytics dbt Documentation

- dbt has an awesome Slack community - community.getdbt.com

- dbt may be "Data Build Tool", but if you want to talk more about

doing more tobuildtables...